Machine learning models are now used in a growing range of fields, which makes addressing bias in these models crucial.

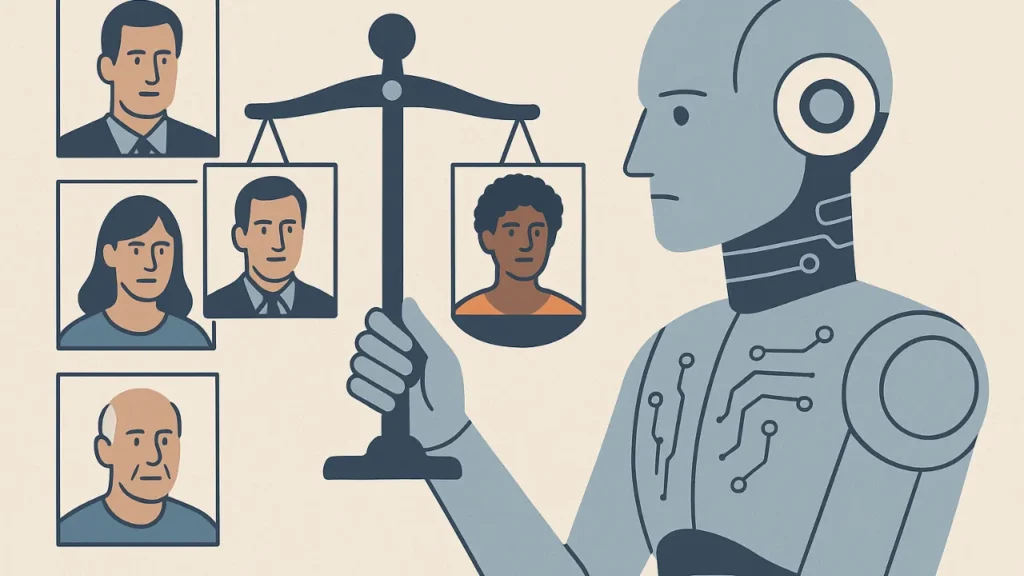

In classification tasks, for example, where models are trained to classify data into different categories, this problem can manifest in a variety of ways, including racial, gender, or socioeconomic biases that result in unfair outcomes in decision-making processes.

To address this problem, researchers have developed a variety of methods for reducing bias in machine learning models.

So, what is bias mitigation in AI?

The strategies and tactics used to lessen or completely eradicate unjust biases in AI systems are referred to as bias mitigation. Unbalanced algorithms, biased training data, and other sources that represent systemic or human biases can all contribute to these biases.

The aim is to make sure AI models are fair and equitable and do not discriminate against any particular group.

What is Bias in AI?

Two common causes of bias in AI models are the models’ own design and the training data they employ. Models can occasionally favor particular results because they reflect the assumptions of the developers who created them. Furthermore, the data used to train the AI may cause bias.

Machine learning is the process by which AI models analyze vast amounts of training data. In order to generate predictions and judgments, these models look for patterns and correlations in the data.

When the training data contains patterns of historical bias and systemic disparity, AI algorithms can learn those patterns and draw conclusions that mirror them.

Furthermore, even minor biases in the initial training data can result in pervasive discriminatory outcomes because machine learning tools process data on a massive scale.

The Significance of Tackling AI Bias

Humans are inherently biased. This bias results from a narrow view of the world and the propensity to generalize knowledge in order to expedite learning. But when biases harm other people, ethical problems occur.

Human-biased AI tools have the potential to systematically increase this harm, particularly as they are incorporated into the institutions and frameworks that influence our contemporary lives. Think about e-commerce chatbots, healthcare diagnostics, human resources hiring, and law enforcement surveillance.

All of these tools have the potential to increase productivity and offer creative solutions, but if not used properly, they also come with serious risks. These AI tools’ biases have the potential to worsen already-existing disparities and give rise to brand-new kinds of discrimination.

Consider a parole board using artificial intelligence (AI) to assess a prisoner’s risk of reoffending. It would be unethical for the algorithm to tie that risk to the prisoner’s gender or race. Biases in generative AI solutions can also lead to discriminatory results.

When creating job descriptions, for instance, an AI model must be built to avoid using biased language or unintentionally excluding particular demographics. Ignoring these biases may result in discriminatory hiring practices and the continuation of workforce inequality.

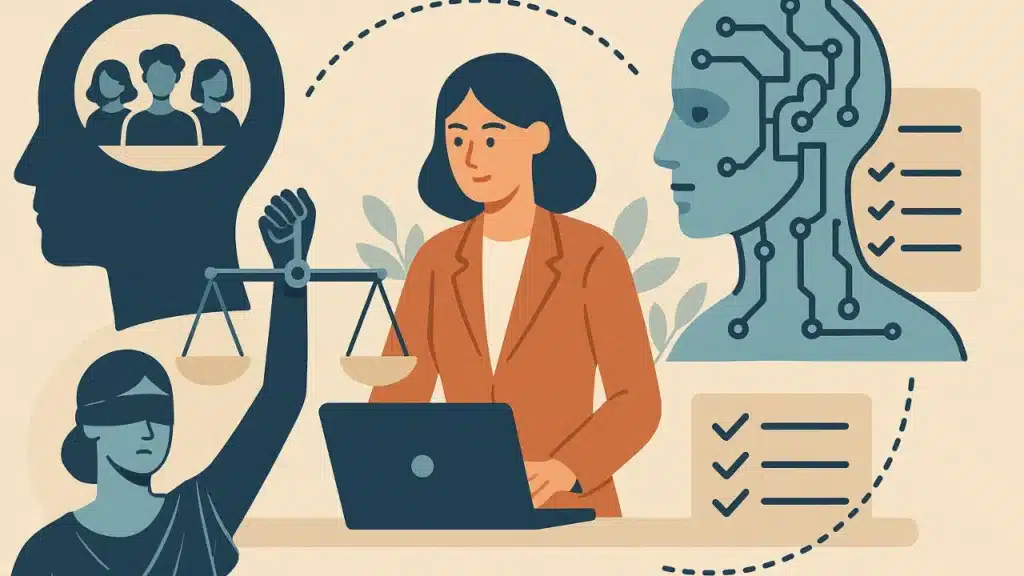

Examples such as these highlight why responsible AI practice matters for organizations: strategies to reduce bias should be identified before AI is used to inform decisions that affect real people. In order to protect people and uphold public confidence, AI systems must be made fair, accurate, and transparent.

How to Mitigate Bias in AI?

A comprehensive strategy is needed to address and mitigate bias in AI systems. The following are some crucial tactics that can be used to attain just and equal results:

Data pre-processing techniques: Before AI models train on data, the data is transformed, cleaned, and balanced to lessen the impact of discrimination.

Fairness-aware algorithms: These algorithms build fairness rules and constraints into the model itself to help ensure that the results it produces are fair to all groups.

Post-processing techniques: Post-processing adjusts an AI model's results to help ensure equitable treatment. Unlike pre-processing, this adjustment takes place after the model has produced its output. For instance, a large language model that generates text might include a screener that identifies and removes hate speech.

Transparency and auditing: Human oversight is built into procedures so that AI-generated decisions can be checked for impartiality and equity. Developers can also make it transparent how AI systems reach their decisions, so that people can judge how much weight to give the results. The findings from these audits are then used to further improve the AI tools involved.

Working Together to Reduce AI Bias

For businesses that use enterprise AI solutions, addressing AI bias requires a collaborative strategy involving key departments. Key tactics include:

Cooperation with data teams: To conduct thorough audits and guarantee that datasets are impartial and representative, organizations should collaborate with data specialists. To find possible problems, the training data used for AI models must be reviewed on a regular basis.

Engagement with legal and compliance: To create explicit policies and governance frameworks that require openness and nondiscrimination in AI systems, it is crucial to collaborate with legal and compliance teams. This partnership reduces the possibility of biased results.

Improving diversity in AI development: Companies should encourage diversity in the teams that develop AI because different viewpoints are essential for identifying and correcting biases that might otherwise go overlooked.

Support for training initiatives: Businesses can spend money on training courses that stress inclusive behavior and AI bias awareness. Workshops or partnerships with outside groups to advance best practices may fall under this category.

Putting in place strong governance frameworks: Organizations ought to put in place frameworks that specify responsibility and supervision for AI systems. This entails establishing precise rules for the moral application of AI and making sure that standards are regularly monitored.

By putting these tactics into practice, businesses can promote an inclusive workplace culture and strive toward more equitable AI systems.

Bias Mitigation in ML Models

In recent years, machine learning has gained a lot of popularity and has become a significant part of our lives, frequently in ways that people are unaware of.

For example, the recommender systems behind services like Amazon, Netflix, and Spotify use machine learning (ML) algorithms to analyze user behavior and recommend movies, songs, or products.

Regression tasks are another example, where models are employed for marketing, cost estimation, and financial forecasting. One of the most popular machine learning tasks is “classification,” in which models predict class labels.

These models are used in a variety of tasks, including medical decision support, sentiment analysis for a tweet or product review, and more.

Improving the accuracy or efficiency of classification models is important, but it is just as important to make sure these systems do not discriminate against underrepresented groups, often referred to as sensitive or protected groups.

This dedication to equity is particularly important for systems whose results have the potential to profoundly impact people’s lives. One of the most notable instances of bias in an ML model is the well-known COMPAS software, which various courts in the US use to estimate the likelihood that a person will commit another crime.

Even though the algorithm took into account a number of factors to produce the results, subsequent studies showed that the model was biased against Black people in comparison to white people.

Bias has been discovered in a number of other domains, including healthcare and employment recommendations, so this is not an isolated instance. Therefore, understanding the ethical ramifications of machine learning and making sure that bias is not reinforced during the model-building process are essential.

A number of fairness metrics, such as Equalized Odds, Demographic Parity (also called Statistical Parity), and Equal Opportunity, can be used to detect bias in models that have already been deployed. Data scientists also develop techniques to reduce bias during the model-building process itself.
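To make these metrics concrete, here is a minimal sketch in plain Python. The function names, the toy data, and the two group labels are illustrative assumptions, not taken from any particular library. Demographic parity compares how often each group receives a positive prediction, equal opportunity compares true positive rates, and equalized odds compares both true and false positive rates. Reporting the larger of the two gaps for equalized odds is one common convention, not the only one.

```python
from collections import defaultdict

def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate (TPR), and false positive rate (FPR)."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += yp
        if yt == 1:
            c["pos"] += 1
            c["tp"] += yp
        else:
            c["neg"] += 1
            c["fp"] += yp
    return {
        g: {
            "selection_rate": c["selected"] / c["n"],
            "tpr": c["tp"] / c["pos"] if c["pos"] else float("nan"),
            "fpr": c["fp"] / c["neg"] if c["neg"] else float("nan"),
        }
        for g, c in counts.items()
    }

def gap(rates, key):
    """Largest difference between any two groups for one rate."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)

def fairness_report(y_true, y_pred, groups):
    rates = group_rates(y_true, y_pred, groups)
    tpr_gap = gap(rates, "tpr")
    fpr_gap = gap(rates, "fpr")
    return {
        "demographic_parity_diff": gap(rates, "selection_rate"),
        "equal_opportunity_diff": tpr_gap,
        "equalized_odds_diff": max(tpr_gap, fpr_gap),
    }

# Toy example (made-up data): group "a" is favored by the predictions.
groups = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
report = fairness_report(y_true, y_pred, groups)
print(report)  # all three differences are 0.5 on this toy data
```

A value of 0 on a metric means the groups are treated identically by that measure. Which metric is appropriate depends on the application, and they generally cannot all be satisfied at once.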

These techniques are typically divided into three categories, based on the stage of training at which they are applied: pre-processing, in-processing, and post-processing methods.

Despite the fact that bias exists in a wide range of tasks, the majority of efforts in this context concentrate on classification problems, specifically binary classification, as demonstrated by Hort et al., who categorize the methods as follows:

Pre-Processing Algorithms

Pre-processing techniques concentrate on the initial phases of training. They modify the dataset to reduce bias before it is used as input for a machine learning model. The goal is more equitable data that in turn produces a more equitable model.

These techniques fall into three categories: sampling, representation, and relabeling and perturbation.
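As one illustration of a pre-processing approach, the sketch below implements reweighing, a well-known technique that assigns each training example a weight so that group membership and label look statistically independent in the weighted data. It is only one possible pre-processing method, and the function names and toy data are assumptions made for illustration.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    Combinations that are under-represented relative to independence
    (e.g. positive labels in a disadvantaged group) get weights above 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Share of the total weight in `group` that belongs to positive examples."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total

# Toy example (made-up data): group "a" has 3 of 4 positives, group "b" has 1 of 4.
groups = ["a"] * 4 + ["b"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

for g in ("a", "b"):
    print(g, weighted_positive_rate(groups, labels, weights, g))
```

After reweighing, both groups have the same weighted positive rate. The weights can then be passed to any training routine that accepts per-sample weights.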

In-Processing Algorithms

These techniques modify the learning algorithm itself while the ML model is being trained in order to improve the model’s fairness. They fall into three categories: adversarial learning, adjusted learning, and regularization and constraints.
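A rough sketch of the regularization idea follows: a small logistic regression trained by gradient descent, with an extra penalty on the squared gap between the average predicted score of two groups. The penalty form, the `fairness_weight` parameter, the learning rate, and the toy data are all assumptions chosen for illustration, not a method taken from the literature cited above.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, x):
    """Predicted probability; the last entry of w is the bias term."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])

def score_gap(w, X, groups):
    """Mean predicted score of group 1 minus mean predicted score of group 0."""
    s1 = [predict(w, x) for x, g in zip(X, groups) if g == 1]
    s0 = [predict(w, x) for x, g in zip(X, groups) if g == 0]
    return sum(s1) / len(s1) - sum(s0) / len(s0)

def train(X, y, groups, fairness_weight=0.0, lr=0.5, epochs=2000):
    """Minimize mean log loss + fairness_weight * score_gap ** 2."""
    n, d = len(X), len(X[0])
    n1 = sum(groups)
    n0 = n - n1
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        gap = score_gap(w, X, groups)
        grad = [0.0] * (d + 1)
        for x, yi, g in zip(X, y, groups):
            p = predict(w, x)
            # Gradient of the mean log loss with respect to the logit.
            dz = (p - yi) / n
            # Gradient of the penalty: 2 * gap * d(gap)/dp * dp/dlogit.
            sign = 1.0 / n1 if g == 1 else -1.0 / n0
            dz += fairness_weight * 2.0 * gap * sign * p * (1.0 - p)
            for j in range(d):
                grad[j] += dz * x[j]
            grad[-1] += dz
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

# Toy example (made-up data): the single feature is correlated with group membership.
X = [[0.1], [0.3], [0.4], [0.6], [0.5], [0.7], [0.8], [0.9]]
y = [0, 0, 1, 0, 0, 1, 1, 1]
groups = [0, 0, 0, 0, 1, 1, 1, 1]

gap_plain = score_gap(train(X, y, groups, fairness_weight=0.0), X, groups)
gap_fair = score_gap(train(X, y, groups, fairness_weight=2.0), X, groups)
print(gap_plain, gap_fair)
```

Raising `fairness_weight` trades predictive fit for a smaller gap between groups, so in practice the value would need to be tuned against both accuracy and the chosen fairness metric.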

Post-Processing Algorithms

Last but not least, post-processing techniques are used after model training to influence the model’s results. They are especially useful in situations where access to training data is restricted or direct model access is not feasible.

Although these approaches are independent of the model and do not require access to the training process, they are less common in the literature than the other two. They fall into a variety of categories, including input correction, classifier correction, and output correction.
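As a simple illustration of post-processing, the sketch below leaves the trained model untouched and only changes how its scores are turned into decisions, using a separate threshold per group so that each group is selected at roughly the same rate. The function names, the `target_rate` parameter, and the scores are hypothetical, and this is only one of many possible post-processing adjustments.

```python
def group_thresholds(scores, groups, target_rate):
    """For each group, pick the threshold that selects about target_rate of that group."""
    thresholds = {}
    for g in set(groups):
        g_scores = sorted((s for s, gi in zip(scores, groups) if gi == g), reverse=True)
        # Number of group members to select (at least one).
        k = max(1, round(target_rate * len(g_scores)))
        # The k-th highest score is the lowest score still selected.
        # Tied scores can cause slightly more than k selections.
        thresholds[g] = g_scores[k - 1]
    return thresholds

def apply_thresholds(scores, groups, thresholds):
    return [1 if s >= thresholds[g] else 0 for s, g in zip(scores, groups)]

# Toy example (made-up scores): a single threshold of 0.6 would select
# three members of group "a" but only one member of group "b".
groups = ["a"] * 4 + ["b"] * 4
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.5, 0.4, 0.2]

thresholds = group_thresholds(scores, groups, target_rate=0.5)
decisions = apply_thresholds(scores, groups, thresholds)
print(thresholds["a"], thresholds["b"])  # 0.8 0.5
print(decisions)  # [1, 1, 0, 0, 1, 1, 0, 0]
```

Note that this approach needs the group attribute at decision time, which may not be available or permitted in every setting.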

FAQs: What is Bias Mitigation in AI?

What is Bias Mitigation in AI?

In artificial intelligence, bias mitigation entails using a variety of strategies and tactics to lessen the influence of biased data, algorithms, or decision-making procedures.

How can bias be eliminated by AI?

Algorithms enable us to reduce the impact of bias on our choices and behaviors by revealing it. They alert us to our cognitive blind spots, expose imbalances, and assist us in making decisions that are more objective and accurate by reflecting objective data rather than unproven assumptions.

What harm does AI bias cause?

AI systems that are biased may make poor decisions and be less profitable. If biases in AI tools are made public, businesses risk losing market share and customers, as well as suffering reputational harm.