Best GPU for AI: The training stage of the deep learning pipeline takes the longest to complete, and it is expensive. Much of that cost is human: data scientists have to wait hours or days for training to finish, which reduces their productivity and slows the release of new models.

With deep learning GPUs, you can run AI computations in parallel and cut training time. When evaluating GPUs, consider their interoperability, licensing, support for data parallelism, GPU memory consumption, and performance.


Why Are GPUs Fundamental for AI?

The training phase is the lengthiest and most resource-intensive stage of a deep learning system. Models with few parameters can be trained in a reasonable amount of time, but training time increases as the parameter count rises. This creates two costs:

Your team loses precious time waiting and your resources are used for a longer period of time.
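To see why training time grows with parameter count, a common back-of-envelope rule for dense transformer models puts training compute at roughly 6 × N × D floating-point operations, where N is the number of parameters and D the number of training tokens. The sketch below turns that rule into a wall-clock estimate. The function name, the peak throughput, and the utilization factor are illustrative assumptions, not measured figures for any particular GPU.

```python
def estimate_training_hours(num_params: float, num_tokens: float,
                            peak_flops: float, utilization: float = 0.4,
                            num_gpus: int = 1) -> float:
    """Rough wall-clock training time in hours (hypothetical helper).

    Assumes the ~6 * N * D FLOPs rule of thumb for dense transformer training
    and that each GPU sustains `utilization` of its peak throughput.
    All inputs are placeholders; measure real throughput before planning.
    """
    total_flops = 6.0 * num_params * num_tokens
    sustained_flops = peak_flops * utilization * num_gpus
    return total_flops / sustained_flops / 3600.0


# Placeholder scenario: 20B training tokens on a GPU with 100 TFLOP/s peak.
for params in (1e8, 1e9, 1e10):
    hours = estimate_training_hours(params, 20e9, 100e12)
    print(f"{params:.0e} params -> {hours:,.1f} h")
```

Because the estimate is linear in N, doubling the parameter count at a fixed token budget doubles the projected training time, and spreading the work over more GPUs divides it (in this idealized model, which ignores communication overhead).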

Because GPUs can parallelize training tasks, distribute work across clusters of processors, and perform many compute operations at once, you can train models with large numbers of parameters quickly and efficiently, lowering both costs.
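Data parallelism, one of the main ways training is spread across GPUs, can be illustrated without any GPU at all. The simulation below is a minimal CPU-only sketch, assuming synchronous data-parallel SGD on a toy one-weight linear model; the function names are hypothetical. Real frameworks (for example PyTorch's `DistributedDataParallel`) do the gradient averaging with a collective all-reduce across devices.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair


def mse_grad(w: float, batch: List[Sample]) -> float:
    """Gradient of the mean squared error for the toy model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w: float, batch: List[Sample], num_workers: int, lr: float) -> float:
    """One synchronous data-parallel SGD step, simulated sequentially.

    The batch is split into equal shards (one per simulated GPU), each worker
    computes a local gradient on its shard, and the local gradients are
    averaged, which is what an all-reduce(mean) does on real hardware.
    """
    assert len(batch) % num_workers == 0, "use equal-sized shards"
    shard = len(batch) // num_workers
    local_grads = [mse_grad(w, batch[i * shard:(i + 1) * shard]) for i in range(num_workers)]
    avg_grad = sum(local_grads) / num_workers  # stand-in for all-reduce(mean)
    return w - lr * avg_grad


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # true weight is 3.0
w = 0.0
for _ in range(50):
    w = data_parallel_step(w, data, num_workers=4, lr=0.01)
print(round(w, 4))
```

With equal shard sizes, the averaged gradient equals the full-batch gradient, so four simulated workers take exactly the same step as one worker would; the gain on real hardware is that the four shards are processed at the same time.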

Because they are optimized for specific workloads, GPUs complete those jobs faster than general-purpose hardware. Offloading the jobs to GPUs also frees your CPUs for other tasks, removing bottlenecks caused by limited compute.

How to Choose the Best GPU for AI

The GPUs you choose affect both performance and cost. Choose GPUs that can grow with your project over time and that support integration and clustering. For large-scale projects, this means choosing production-grade or data center GPUs.

Factors to Consider

The Capacity to Network GPUs

When selecting a GPU, consider which units can be linked together. GPU interconnection determines whether you can use multi-GPU and distributed training methods, and how far your implementation can scale.

Consumer GPUs often do not support interconnection (NVLink connects GPUs within a server, while InfiniBand or RoCE links GPUs across servers). NVIDIA has removed interconnects from GPUs priced below the RTX 2080.
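Interconnects matter because multi-GPU training must synchronize gradients on every step. A common scheme is ring all-reduce, in which each GPU transmits about 2(N - 1)/N times the size of the gradient buffer. The estimate below is a simplified sketch that ignores latency and the overlap of communication with computation; the function name is hypothetical and the bandwidth values are placeholders, not specifications for NVLink, InfiniBand, or PCIe.

```python
def ring_allreduce_seconds(num_params: float, num_gpus: int,
                           link_bandwidth_gbps: float, bytes_per_value: int = 2) -> float:
    """Bandwidth-only estimate of one ring all-reduce of the gradients.

    In a ring all-reduce each GPU sends 2 * (N - 1) / N times the gradient
    buffer over its link. `link_bandwidth_gbps` is the per-GPU link bandwidth
    in gigabytes per second (a placeholder input; look up your real figure).
    Latency and compute/communication overlap are ignored.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single GPU
    buffer_bytes = num_params * bytes_per_value  # e.g. FP16 gradients: 2 bytes each
    traffic_bytes = 2.0 * (num_gpus - 1) / num_gpus * buffer_bytes
    return traffic_bytes / (link_bandwidth_gbps * 1e9)


for label, bandwidth in (("fast link (placeholder 100 GB/s)", 100.0),
                         ("slow link (placeholder 10 GB/s)", 10.0)):
    t = ring_allreduce_seconds(1e9, 8, bandwidth)
    print(f"{label}: {t * 1000:.0f} ms per gradient sync")
```

With these placeholder numbers, synchronizing a 1-billion-parameter FP16 gradient across 8 GPUs takes about 35 ms per step on the fast link and about 350 ms on the slow one, which is why a slow interconnect can erase much of the benefit of adding GPUs.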

Supporting Software

NVIDIA GPUs have the best support among machine learning libraries and popular frameworks such as PyTorch and TensorFlow. The NVIDIA CUDA Toolkit includes GPU-accelerated libraries, a C and C++ compiler and runtime, and optimization and debugging tools.

This lets you get started right away without building custom integrations.
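Because the major frameworks ship with CUDA support, checking which GPUs they can see takes only a few lines. The sketch below assumes PyTorch as the framework (TensorFlow has its own equivalents) and uses only its public `torch.cuda` calls; the helper name `describe_cuda_devices` is hypothetical. It degrades gracefully when PyTorch or a CUDA GPU is missing.

```python
def describe_cuda_devices() -> list:
    """Return (name, memory in GiB) for each CUDA GPU visible to PyTorch.

    Returns an empty list if PyTorch is not installed or if no CUDA-capable
    GPU and driver are available.
    """
    try:
        import torch
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    devices = []
    for i in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(i)
        devices.append((props.name, props.total_memory / 2**30))  # bytes -> GiB
    return devices


if __name__ == "__main__":
    found = describe_cuda_devices()
    if not found:
        print("No CUDA GPU visible to PyTorch.")
    for name, mem_gib in found:
        print(f"{name}: {mem_gib:.1f} GiB")
```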

Licensing

Take NVIDIA’s guidance on which processors to use in data centers into account. Since a 2018 licensing update, using CUDA software with consumer GPUs in a data center may be restricted, so organizations may need to switch to GPUs designed for production.

5 Best GPUs for AI in 2024

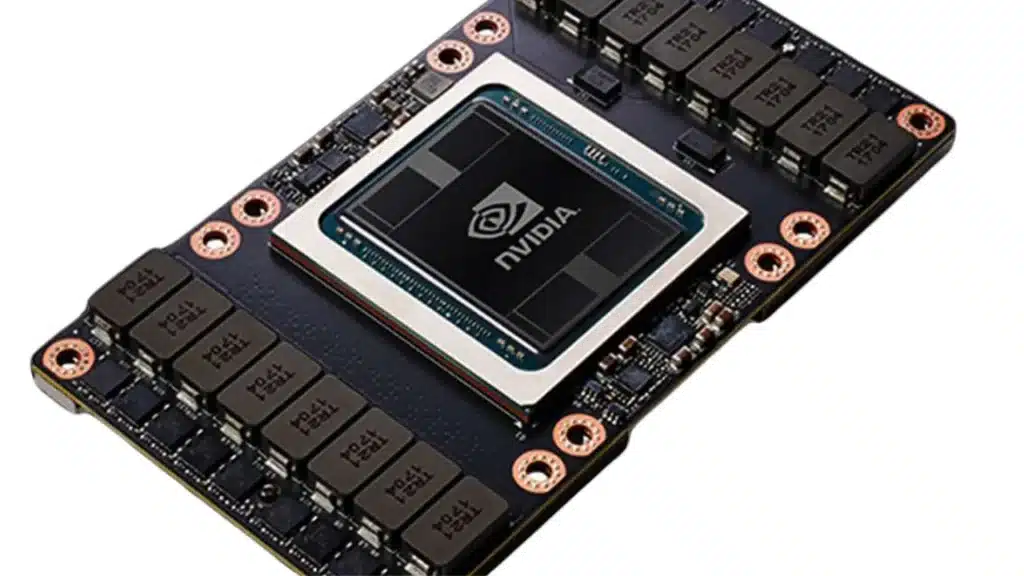

NVIDIA A100

The NVIDIA A100 is a data center GPU designed for deep learning and other professional workloads. It is considered a strong option for deep learning for the following reasons:

- Ampere architecture

- High performance

- Enhanced mixed-precision training

- High memory capacity

- Multi-instance GPU (MIG) capability

These features make the NVIDIA A100 a strong choice for deep learning jobs. Its large memory capacity, high performance, advanced AI capabilities, and efficient use of GPU resources are fundamental for building and managing complex deep neural networks.
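Mixed-precision training runs much of the math in 16-bit floating point, and one reason it needs care is that very small gradients can underflow to zero in FP16. Frameworks counter this with loss scaling (PyTorch's `torch.cuda.amp.GradScaler` is one implementation). The standard-library sketch below only simulates the number-format effect on the CPU using Python's `struct` half-precision format; the gradient value and the fixed scale of 2^16 are illustrative assumptions, and the helper names are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def scaled_fp16_grad(grad: float, loss_scale: float) -> float:
    """Simulate static loss scaling.

    The gradient is multiplied by the scale, stored in FP16, and then divided
    by the scale again in higher precision (as master weights would be in FP32).
    """
    return to_fp16(grad * loss_scale) / loss_scale


tiny_grad = 1e-8                                 # hypothetical small gradient value
print(to_fp16(tiny_grad))                        # underflows to 0.0 in FP16
print(scaled_fp16_grad(tiny_grad, 2.0 ** 16))    # survives, close to 1e-8
```

Without scaling, the update from this gradient is lost entirely; with scaling, it is preserved to within FP16's relative precision. Production implementations usually adjust the scale dynamically, lowering it when the scaled values overflow.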

NVIDIA RTX A6000

The NVIDIA RTX A6000 is a professional GPU built on the Ampere architecture that is well suited to deep learning applications. Its high performance, advanced AI capabilities, and large memory capacity let it train and run deep neural networks.

The following characteristics make the RTX A6000 a strong option for deep learning:

- Ampere architecture

- High performance

- AI features

- Large memory capacity

Although the RTX A6000 is aimed mainly at professional applications, it is also useful for deep learning workloads. Its high performance, substantial memory, and AI-specific features make it an effective choice for training and running deep neural networks.
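Memory capacity is what limits model size on a single card. A widely used rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter for weights, gradients, and optimizer state, before counting activations. The sketch below applies that rule; the 80% headroom factor and the function names are assumptions for illustration, and real usage depends on batch size, sequence length, and framework.

```python
# Bytes per parameter for mixed-precision training with Adam, excluding activations:
# FP16 weights (2) + FP16 gradients (2) + FP32 master weights (4)
# + Adam first moment (4) + Adam second moment (4) = 16.
BYTES_PER_PARAM_MIXED_ADAM = 2 + 2 + 4 + 4 + 4


def training_state_gib(num_params: float,
                       bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> float:
    """Memory for weights, gradients, and optimizer state in GiB (no activations)."""
    return num_params * bytes_per_param / 2**30


def fits_for_training(num_params: float, gpu_memory_gib: float, headroom: float = 0.8) -> bool:
    """Hypothetical check: the state must fit in `headroom` of GPU memory,
    leaving the rest for activations and framework overhead."""
    return training_state_gib(num_params) <= gpu_memory_gib * headroom


# 48 GiB is roughly the capacity of a 48 GB card such as the RTX A6000.
for params in (1e9, 2e9, 3e9):
    print(f"{params / 1e9:.0f}B params: {training_state_gib(params):.1f} GiB, "
          f"fits in 48 GiB: {fits_for_training(params, 48)}")
```

Under these assumptions, a 2-billion-parameter model's training state (about 30 GiB) fits within the headroom of a 48 GiB card, while a 3-billion-parameter model (about 45 GiB) does not.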

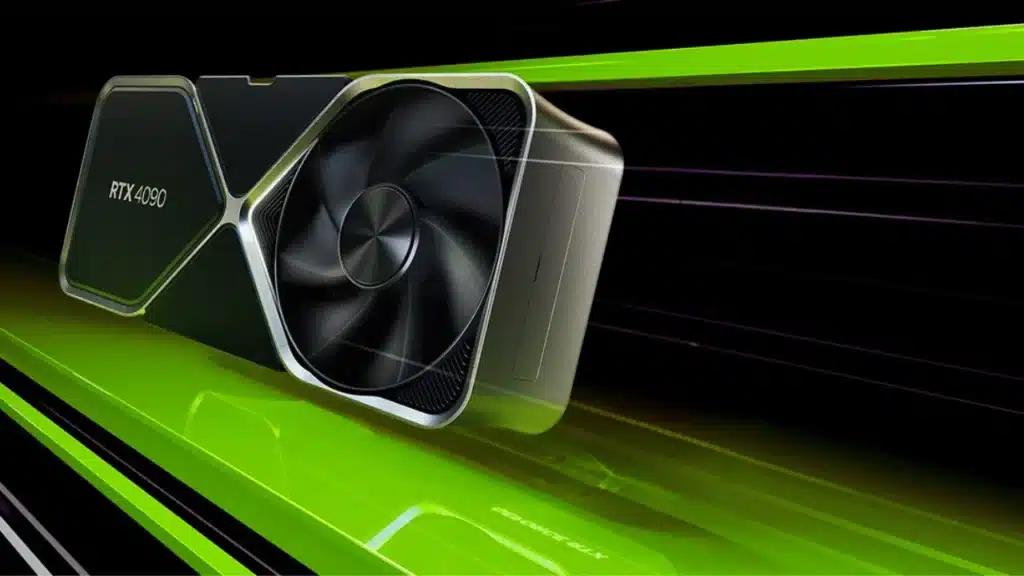

NVIDIA RTX 4090

The NVIDIA GeForce RTX 4090 is a capable consumer-grade graphics card. Professional GPUs such as the NVIDIA A100 or RTX A6000 are better suited to deep learning, but the RTX 4090 still offers several useful features:

- High number of CUDA cores

- High memory bandwidth

- Large memory capacity

- Support for CUDA and cuDNN

If you are serious about deep learning and need the best performance available, a professional GPU is the better option.

The RTX 4090 can be a top choice if you are on a tight budget or just need to train small to medium-sized models.
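What counts as a small or medium-sized model on a 24 GB card such as the RTX 4090 depends heavily on numeric precision. For inference, a rough upper bound is the number of parameters whose weights alone fit in memory. The sketch below assumes 80% of memory is usable and ignores activations, caches, and framework overhead; the function name and headroom factor are hypothetical, and the results are arithmetic, not benchmarks.

```python
BYTES_PER_VALUE = {"fp32": 4, "fp16/bf16": 2, "int8": 1}


def max_params_for_weights(gpu_memory_gib: float, precision: str, headroom: float = 0.8) -> float:
    """Largest parameter count whose weights alone fit in `headroom` of GPU memory.

    Ignores activations, KV caches, and framework overhead, so treat the
    result as an upper bound for inference, not a guarantee.
    """
    usable_bytes = gpu_memory_gib * 2**30 * headroom
    return usable_bytes / BYTES_PER_VALUE[precision]


for precision in BYTES_PER_VALUE:
    billions = max_params_for_weights(24, precision) / 1e9
    print(f"{precision:>9}: up to ~{billions:.1f}B parameters in 24 GiB")
```

Under these assumptions, 24 GiB holds the weights of roughly 5B parameters in FP32, 10B in FP16/BF16, and 20B in INT8. Training the same models needs far more memory per parameter.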

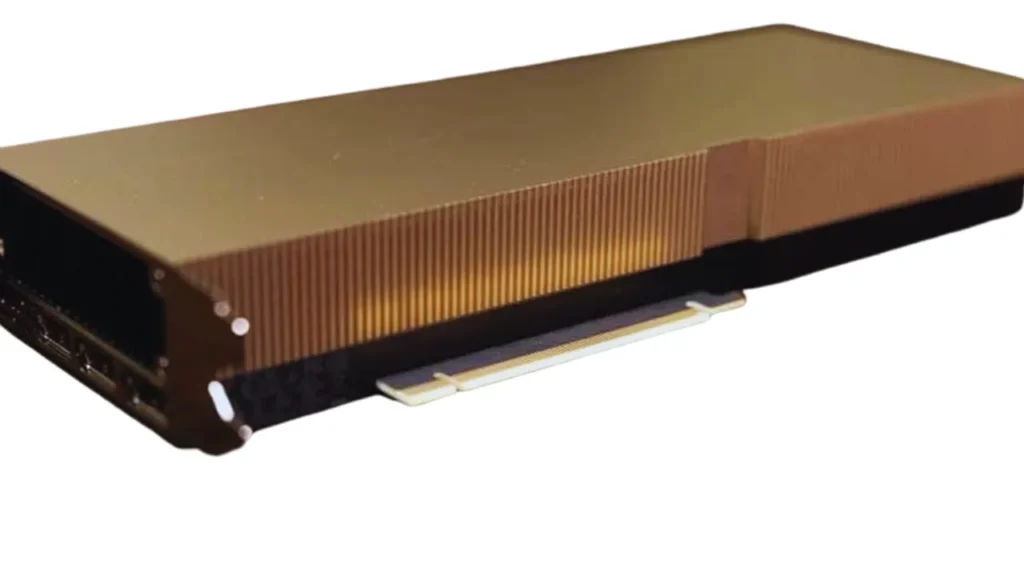

NVIDIA A40

The NVIDIA A40 is intended for professional and data center applications, but it can also handle deep learning workloads. It is appropriate for deep learning for the following reasons:

- Ampere architecture

- High performance

- Memory capacity

- AI and deep learning optimization

- Support and compatibility

Even though it may not match the performance of GPUs such as the A100, the A40’s considerable processing capacity and AI features make it a suitable option for deep learning tasks. Because it balances performance and cost, it is a sensible choice for businesses and researchers working on deep learning projects.

NVIDIA V100

The NVIDIA V100 is a GPU built for high-performance computing and AI workloads, which makes it well suited to deep learning. It is regarded as a strong option for deep learning for the following reasons:

- Volta architecture

- High performance

- Memory capacity

- Mixed-precision training

- NVLink interconnect

The NVIDIA V100 has been widely used for deep learning in data centers and high-performance computing environments. Its robust design, high performance, and AI-specific capabilities make it a dependable option for training and running deep neural networks.

The V100’s price point may make it most common in professional and enterprise settings, but it remains a capable GPU for deep learning.

Conclusion: Best GPU for AI

Which graphics card is best for deep learning depends on the needs of your work. The NVIDIA A100 is the best option for demanding jobs that need high performance. The RTX A6000 offers a reasonable price/performance ratio for medium-sized applications, and the RTX 4090 is a good choice for enthusiasts or smaller-scale jobs.

The NVIDIA A40 is best suited to entry-level deep learning work, while the NVIDIA V100 is a reasonably priced choice for users with moderate requirements.

FAQs: Best GPU for AI

What factors should be considered when choosing a GPU for AI applications?

When selecting the best GPU for AI, consider the AI workloads and deep learning tasks you will run, the algorithms you will use, and the performance requirements of your applications.

Also consider the amount of GPU memory, the availability of Tensor Cores, and whether a single GPU is enough or you need a multi-GPU server.

What are the best GPUs for AI applications in 2024?

The technology is evolving quickly, but the best GPUs for AI applications in 2024 are those optimized for deep learning models and generative AI tasks. Look for GPUs that offer high performance for both training and inference, such as the latest models from NVIDIA and other leading GPU manufacturers.

Which GPU is recommended for deep learning tasks?

For deep learning tasks, choose a GPU designed for deep learning training and inference. Look for high compute capability and enough GPU memory to handle the size and complexity of your models.

What are some of the best GPUs for deep learning projects?

Some of the best GPUs for deep learning projects include the NVIDIA RTX A6000, which offers high performance and suits advanced deep learning applications, including language models. GPUs like it are optimized for AI workloads and can handle a wide range of deep learning tasks.