AI is transforming our world, from how we communicate and what we watch to how we get hired or insured. But as these powerful technologies spread, we need to know how they work, what data they use, and how they make decisions.

We must also ensure that the people affected by these decisions are informed and respected. In this blog post, we explore the concepts of AI transparency and explainable AI and how they can be achieved through technical and governance methods.


The definition of AI transparency

Artificial intelligence (AI) is a vast field encompassing algorithmic systems designed to pursue human-specified goals. These systems can produce content (such as images or text), forecasts, recommendations, or decisions, and they can assist or replace human judgment and actions.

Many of these are called “black box” systems, where the user cannot see or understand how the model works internally. This means that the model is not transparent.

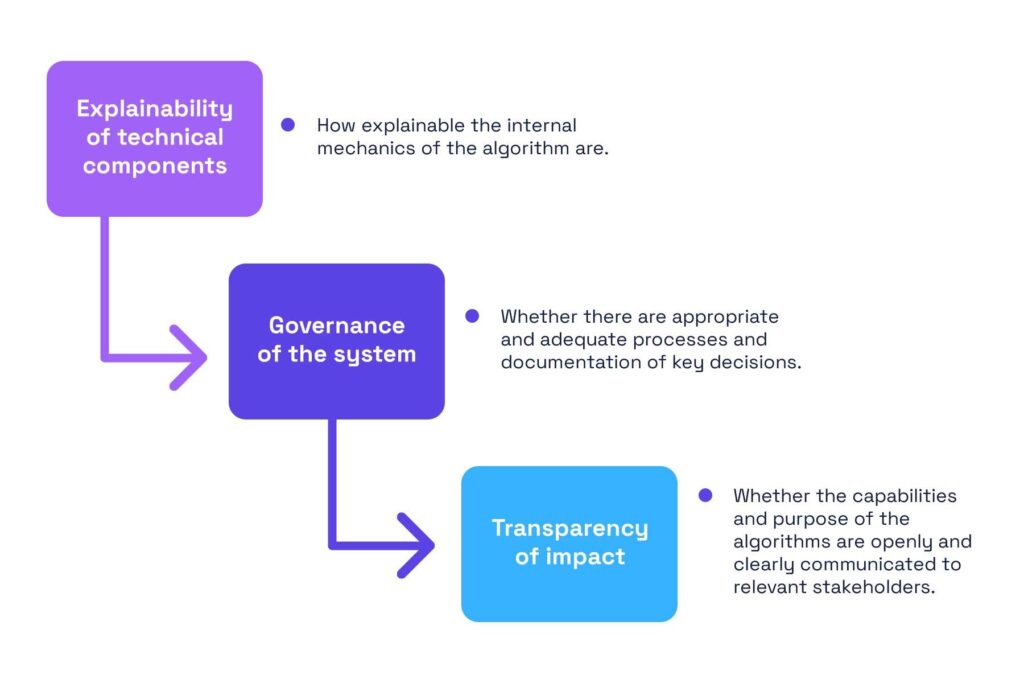

AI transparency is a comprehensive term that covers ideas such as explainable AI (XAI) and interpretability and is a vital issue in the domain of AI ethics (and other related domains such as trustworthy AI and responsible AI). Generally, it consists of three levels:

- Explainability of the technical components: how understandable the inner workings of the algorithm are

- Governance of the system: whether there are suitable and sufficient processes and documentation of critical decisions

- Transparency of impact: whether the abilities and objectives of the algorithms are candidly and plainly communicated to relevant stakeholders

Explainability of the technical components

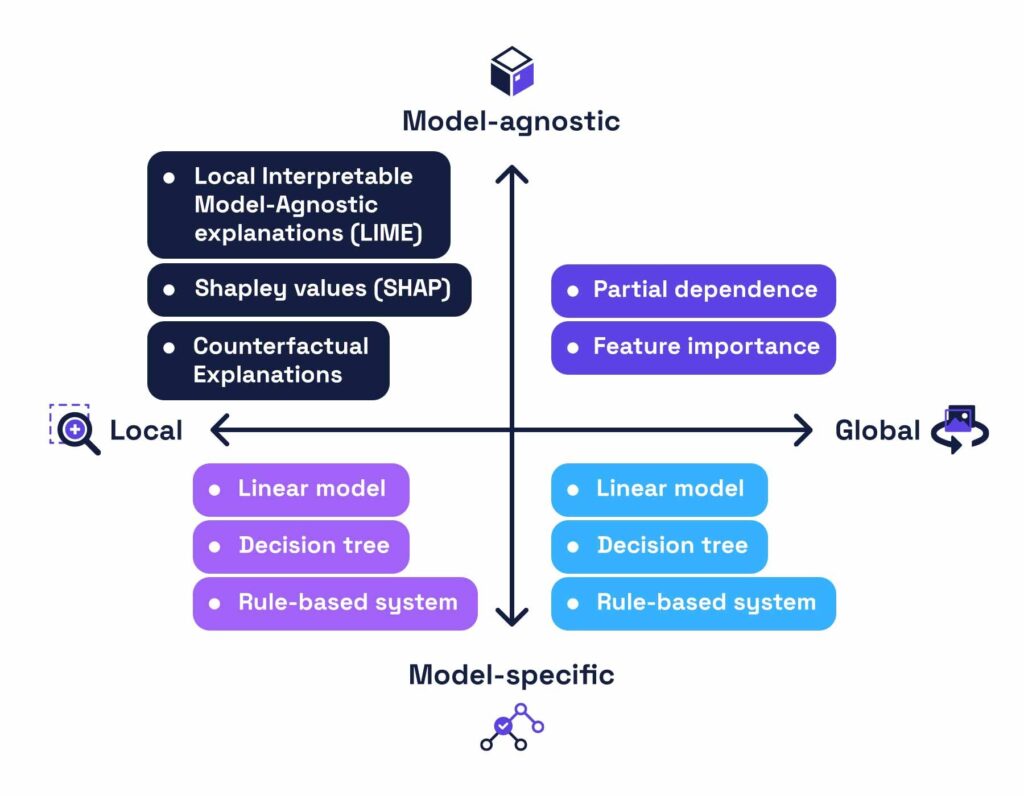

Explainability of the system’s technical components means clarifying what is going on inside an AI system and is based on four kinds of explanations: model-specific, agnostic, global, and local.

- Model-specific explainability: a model has explainability integrated into its creation and deployment

- Model-agnostic explainability: a mathematical method is applied to the outputs of any algorithm to explain which factors drove the model’s decision

- Global-level explainability: understanding the algorithm’s behaviour at a high/dataset/populational level, something that is usually done by researchers and developers of the algorithm

- Local-level explainability: understanding the algorithm’s behaviour at a low/subset/individual level, which typically matters most to the people affected by the algorithm
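
To make the model-agnostic, global, and local ideas above more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: `black_box` is a hypothetical loan-scoring rule, the feature names and data are invented, and the labels are simply the model's own predictions. The global view uses permutation importance (shuffle one feature and see how much accuracy drops), and the local view nudges one person's features to see whether the decision flips. Real explainability tooling is far more careful than this.

```python
import random

def black_box(row):
    """Hypothetical opaque model: approves (1) or rejects (0) an applicant."""
    income, debt, shoe_size = row  # shoe_size is deliberately ignored
    return 1 if 6 * income - 4 * debt > 200 else 0

def accuracy(model, rows, labels):
    """Share of rows where the model's output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Global, model-agnostic view: accuracy drop when one feature is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with feature j replaced by a shuffled value.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances

def local_sensitivity(model, row, deltas):
    """Local view: does nudging each feature of one row flip the decision?"""
    original = model(row)
    flips = []
    for j, delta in enumerate(deltas):
        changed = row[:j] + (row[j] + delta,) + row[j + 1:]
        flips.append(model(changed) != original)
    return flips

# Invented data: (income, debt, shoe_size) for 200 made-up applicants.
data_rng = random.Random(42)
rows = [(data_rng.randint(0, 100), data_rng.randint(0, 100), data_rng.randint(35, 48))
        for _ in range(200)]
labels = [black_box(r) for r in rows]  # explain the model's own behaviour

importances = permutation_importance(black_box, rows, labels, n_features=3)
print("global importances (income, debt, shoe_size):", importances)

# One applicant: lower income by 10, raise debt by 10, raise shoe size by 5.
print("local flips:", local_sensitivity(black_box, (50, 20, 42), (-10, 10, 5)))
# -> local flips: [True, True, False]
```

Because the toy model never looks at `shoe_size`, shuffling that column cannot change any prediction, so its importance is exactly zero. That is the kind of sanity check a model-agnostic method makes possible without opening the model.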

Governance of the system

The second level of transparency, governance, involves setting and following protocols for documenting decisions made about a system from the initial stages of development to deployment and for any updates made to the system. Governance can also entail establishing responsibility for the outputs of a system and incorporating this within any pertinent contracts or documentation.

For instance, contracts should clarify whether liability for any damage or losses lies with the supplier or vendor of a system, the entity deploying the system, or the specific designers and developers of the system. This not only encourages greater care, since a particular party can be held accountable for a design, but can also be used for insurance purposes and to compensate for any losses arising from the deployment or use of the system.

Beyond documentation and responsibility, governance of a system can also refer to the regulation and legislation that govern the use of the system, as well as internal policies within organizations regarding the creation, procurement, and use of AI systems.

Transparency of impact

The third level of transparency involves communicating the capabilities and purpose of an AI system to relevant stakeholders, both those who are directly and indirectly affected. Communications should be delivered promptly and should be clear, accurate, and noticeable.

To make the impact of systems more transparent, information about the type of data points that the algorithm will use and the source of the data should be shared with those affected. Communications should also inform users that they are interacting with an AI system, what shape the system’s outputs take, and how the results will be used.

Especially when a system may be biased against specific groups, information should also be shared about how it performs for each of those groups and whether certain groups might face adverse outcomes if they interact with the system.
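
One way to produce the kind of per-group performance information described above is a simple breakdown of outcomes by group. The sketch below is only an illustration: the group names, the records, and the two metrics chosen (accuracy and selection rate) are assumptions, not a standard the post prescribes.

```python
from collections import defaultdict

def group_report(records):
    """Summarize outcomes per group.

    records: iterable of (group, predicted, actual), where predicted and
    actual are 0 or 1 (1 meaning a favourable decision).
    """
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "selected": 0})
    for group, predicted, actual in records:
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(predicted == actual)
        s["selected"] += int(predicted == 1)
    return {
        group: {
            "n": s["n"],
            "accuracy": s["correct"] / s["n"],
            "selection_rate": s["selected"] / s["n"],
        }
        for group, s in stats.items()
    }

# Invented example records for two hypothetical groups, "A" and "B".
records = [
    ("A", 1, 1), ("A", 1, 0), ("A", 0, 0), ("A", 1, 1),
    ("B", 0, 1), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]

report = group_report(records)
for group, metrics in report.items():
    print(group, metrics)
# A {'n': 4, 'accuracy': 0.75, 'selection_rate': 0.75}
# B {'n': 4, 'accuracy': 0.5, 'selection_rate': 0.25}
```

In this made-up data, group B is both selected less often and predicted less accurately, which is exactly the sort of gap that should be communicated to the people affected.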

Weaknesses of AI transparency

AI transparency clearly has many advantages, so why are not all algorithms transparent? Because transparency has the following drawbacks:

- Prone to hacking. Transparent AI models are more vulnerable to hacks, as malicious actors have more information on how they work and can find weaknesses. To overcome this challenge, developers must build their AI models with security in mind and test their systems.

- It can reveal proprietary algorithms. Another worry with AI transparency is safeguarding proprietary algorithms, as researchers have shown that whole algorithms can be copied just by looking at their explanations.

- Hard to design. Transparent algorithms are more difficult to create, especially for complex models with millions of parameters. In situations where transparency in AI is essential, it might be necessary to use simpler algorithms.

- Governance issues. Blumenfeld warned that another fundamental flaw is assuming any transparency method will meet all needs from a governance perspective. “We need to consider what we need for our systems to be trusted, in context, and then design systems with transparency mechanisms to do so,” she said. For example, it’s tempting to focus on technical transparency methods to ensure interpretability and explainability. However, these methods might still depend on users to detect biased and inaccurate information. If an AI chatbot cites a source, it’s still up to the human to verify whether the source is valid. This takes time and effort and creates room for error.

- Lack of standardized methods to measure transparency. Another problem is that not all transparency methods are dependable. They may produce different results each time they are applied. This lack of dependability and consistency might lower trust in the system and obstruct transparency efforts.

As AI models keep learning and adapting to new data, they must be supervised and assessed to maintain transparency and ensure that AI systems stay trustworthy and aligned with intended outcomes.

How to reach a balance

Like any other computer program, AI needs optimization. To do that, we look at the specific needs of a problem and then adjust our general model to suit those needs best. When implementing AI, an organization must consider the following four factors:

- Legal needs. If the work requires explainability from a legal and regulatory perspective, there may be no option but to provide transparency. Organizations might have to use more straightforward but explainable algorithms to achieve that.

- Severity. If AI is used in life-critical missions, transparency is a must. Likely, such tasks are not dependent on AI alone, so having a reasoning mechanism enhances teamwork with human operators. The same applies if AI affects someone’s life, such as algorithms used for job applications. On the other hand, if the AI task is not critical, an opaque model would work. Consider an algorithm that recommends the next prospect to contact from a database of thousands of leads – verifying the AI’s decision would simply not be worth the time.

- Exposure. Depending on who has access to the AI model, an organization might want to shield the algorithm from unwanted access. Explainability can be good even in cybersecurity if it helps experts make better decisions. But, if outsiders can access the same source and understand how the algorithm works, it might be better to use opaque models.

- Data set. No matter the circumstances, an organization must always aim to have a diverse and balanced data set, preferably from as many sources as possible. AI is only as good as the data it is trained on. By cleaning the training data, removing noise, and balancing the inputs, we can help lower bias and improve the model’s accuracy.
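
As a small illustration of the data set point above, the sketch below checks how balanced a set of training labels is and computes inverse-frequency weights that could be used to rebalance it. The label names and counts are invented, and the weighting formula (total divided by the number of classes times the class count) is one common convention assumed here, not the only option.

```python
from collections import Counter

def balance_report(labels):
    """Return each label's share of the data and an inverse-frequency weight.

    weight = total / (n_classes * count), so rarer labels get larger weights
    and a perfectly balanced data set gives every label a weight of 1.0.
    """
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {
        label: {"share": count / total, "weight": total / (n_classes * count)}
        for label, count in counts.items()
    }

# Invented, deliberately imbalanced training labels.
training_labels = ["approve"] * 80 + ["reject"] * 20

balance = balance_report(training_labels)
for label, info in balance.items():
    print(label, info)
# approve {'share': 0.8, 'weight': 0.625}
# reject {'share': 0.2, 'weight': 2.5}
```

A check like this does not fix bias by itself, but it makes an imbalance visible early, before a model quietly learns to favour the majority class.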

Best practices for implementing AI transparency

Implementing AI transparency, including balancing competing organizational goals, requires continuous collaboration and learning among leaders and employees. It requires a clear understanding of the requirements of a system from a business, user and technical perspective. Blumenfeld said that by training to increase AI literacy, organizations can equip employees to actively contribute to detecting flawed responses or behaviours in AI systems.

Masood said that AI development tends to focus on features, utility, and novelty rather than safety, reliability, robustness, and potential harms, and recommended prioritizing transparency from the start of the AI project. It’s helpful to create datasheets for data sets and model cards for models, implement strict auditing mechanisms, and constantly study the potential harm of models.
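
A model card can start out as nothing more than a structured record that travels with the model. The sketch below is a hypothetical minimal version: the field names, the example values, and the `missing_fields` check are all assumptions chosen for illustration, not an official model card schema.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ModelCard:
    """Hypothetical minimal model card; field names are illustrative only."""
    name: str
    intended_use: str
    training_data: str
    known_limitations: list = field(default_factory=list)
    evaluation: dict = field(default_factory=dict)

    def missing_fields(self):
        """List fields left empty, so gaps in documentation are easy to spot."""
        return [key for key, value in asdict(self).items() if not value]

# Invented example card for a made-up model.
card = ModelCard(
    name="lead-scoring-demo",
    intended_use="Rank sales leads for follow-up; not for hiring or credit decisions.",
    training_data="Internal CRM export (description only, no real data here).",
    evaluation={"accuracy": 0.75},
)

print(json.dumps(asdict(card), indent=2))
print("missing:", card.missing_fields())
# -> missing: ['known_limitations']
```

Keeping the card as data rather than free text means an audit step can refuse to ship a model whose card still has empty sections.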

Critical use cases for AI transparency

AI transparency has many aspects, so teams must identify and explore the potential issues blocking transparency. Parmar finds it helpful to consider the following use cases:

- Data transparency. This includes understanding the data powering AI systems, a vital step in detecting potential biases.

- Development transparency. This involves revealing the conditions and processes in AI model creation.

- Model transparency. This shows how AI systems work, possibly by explaining decision-making processes or by making the algorithms open source.

- Security transparency. This evaluates the AI system’s security during development and deployment.

- Impact transparency. This measures the effect of AI systems, which is done by tracking system usage and monitoring results.

The future of AI transparency

AI transparency is an ongoing process as the industry finds new challenges and better solutions to address them. “As artificial intelligence adoption and innovation keep growing, we’ll see more AI transparency, especially in the enterprise,” Blumenfeld forecasted. However, the methods of AI transparency will differ depending on the needs of a specific industry or organization.

Carroll foresees that insurance premiums in domains where AI risk is significant could also influence the adoption of AI transparency efforts. These will be based on an organization’s overall system risk and proof that best practices have been followed in model deployment. Masood thinks regulatory frameworks will likely have a crucial role in adopting AI transparency.

For example, the EU AI Act emphasizes transparency as a critical aspect. This shows the shift toward more transparency in AI systems to build trust, enable accountability and ensure responsible deployment.

“For professionals like myself, and the overall industry in general, the journey toward full AI transparency is a difficult one, with its share of hurdles and complexities,” Masood said. “However, through the joint efforts of practitioners, researchers, policymakers and society at large, I’m hopeful that we can overcome these challenges and build AI systems that are both powerful, responsible, accountable and, most importantly, trustworthy.”

References:

- EU AI Act

- The Complete Guide to AI Transparency [6 Best Practices] (hubspot.com)

- Overcoming AI’s Transparency Paradox (forbes.com)

- Basic Concepts and Techniques of AI Model Transparency – dotData

- 14 Examples of Transparency – Simplicable

- What is transparency? – Ethics of AI (mooc.fi)

- Transparent-AI Blueprint: Developing a Conceptual Tool to Support the Design of Transparent AI Agents | Request PDF (researchgate.net)