Imagine a chip so powerful it could transform the world of artificial intelligence overnight. That’s exactly what NVIDIA has unveiled with its groundbreaking Blackwell platform. At the 2024 GTC AI conference, the tech giant pulled back the curtain on a GPU that’s not just an upgrade – it’s a quantum leap into the future of computing.

Named after mathematician and statistician David Harold Blackwell, the new Blackwell GPU isn’t just pushing boundaries; according to NVIDIA, it’s obliterating them. Get ready to dive into a world where AI dreams become reality, where the impossible becomes possible, and where the next great technological breakthroughs are just around the corner. Welcome to the Blackwell era, where the future of AI is now.

The Blackwell GPU: A Technological Marvel

At the heart of the Blackwell platform is the eponymous GPU, which NVIDIA boldly claims is the “world’s most powerful chip” designed specifically for datacenter-scale generative AI. This monumental leap in computing power is built upon several key innovations:

Unprecedented Scale and Efficiency

- Transistor Count: The Blackwell GPU packs 208 billion transistors, up from 80 billion in the previous-generation Hopper H100.

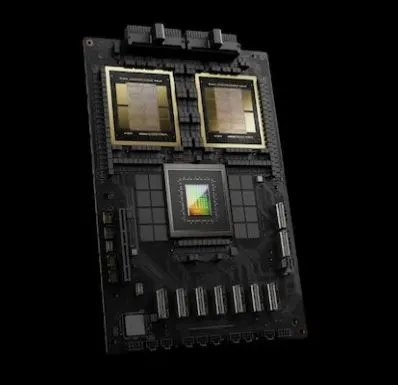

- Dual-Die Design: Built on a custom TSMC 4NP process, the GPU consists of two dies joined by a 10 TB/s (terabytes per second) chip-to-chip interconnect, so that the pair operates as a single, unified GPU.

- Energy Efficiency: NVIDIA reports that Blackwell can run generative AI on trillion-parameter LLMs at up to 25 times lower cost and energy consumption than its Hopper-based predecessor.

Six Core Innovations

- World’s Most Powerful Chip: The 208-billion-transistor, dual-die GPU described above, which NVIDIA presents as a new class of AI superchip.

- Second-Generation Transformer Engine: This custom tensor core architecture, combined with TensorRT-LLM and NeMo Framework innovations, accelerates Large Language Model (LLM) and Mixture of Experts (MoE) training and inference.

- Secure AI: Implementing NVIDIA Confidential Computing, Blackwell introduces hardware-based security measures to prevent unauthorized access.

- Fifth-Generation NVLink and NVLink Switch: Enabling exascale computing with trillion-parameter AI models through enhanced GPU interconnects.

- Decompression Engine: A dedicated engine that supports the latest compression formats to accelerate database queries and data analytics. In the GB200 Superchip, Blackwell works alongside a Grace CPU over a 900 GB/s bidirectional chip-to-chip link.

- Reliability, Availability, and Serviceability (RAS) Engine: A dedicated engine continuously monitors hardware and software data points to improve fault tolerance and enable predictive management.

The GB200 Superchip and NVL72 Server

NVIDIA’s commitment to pushing the boundaries of AI computing extends beyond the GPU itself, encompassing complete system solutions:

GB200 Superchip

- Combines two Blackwell GPUs with one NVIDIA Grace CPU

- Up to 384 GB of HBM3e high-bandwidth memory

- Memory bandwidth up to 16 TB/s

- Can be linked in clusters using NVIDIA’s new Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms at speeds up to 800 Gb/s
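The memory figures above put a ceiling on memory-bound workloads. As a rough sketch, assume single-stream LLM decoding in which every generated token reads all model weights from HBM once; memory bandwidth divided by weight size then gives an idealized upper bound on tokens per second. The function names and the 70-billion-parameter example model are hypothetical, and the estimate ignores KV-cache traffic, compute time, batching, and software overhead, so real throughput will differ.

```python
# Idealized, bandwidth-bound decode estimate for one GB200 Superchip.
# Uses the figures quoted above (384 GB HBM3e, 16 TB/s). Assumptions, not
# NVIDIA figures: batch size 1, each token reads every weight exactly once,
# and KV-cache traffic and compute time are ignored.

GB200_HBM_BYTES = 384e9      # up to 384 GB of HBM3e
GB200_BANDWIDTH_BPS = 16e12  # up to 16 TB/s of memory bandwidth


def weight_bytes(num_params: float, bits_per_param: int) -> float:
    """Bytes needed to store the weights at the given precision."""
    return num_params * bits_per_param / 8


def max_tokens_per_second(num_params: float, bits_per_param: int) -> float:
    """Upper bound on decode speed if each token streams all weights once."""
    size = weight_bytes(num_params, bits_per_param)
    if size > GB200_HBM_BYTES:
        raise ValueError("weights do not fit in one superchip's HBM3e")
    return GB200_BANDWIDTH_BPS / size


if __name__ == "__main__":
    # Hypothetical 70-billion-parameter model at two precisions.
    for bits in (16, 8):
        rate = max_tokens_per_second(70e9, bits)
        print(f"{bits}-bit weights: at most ~{rate:.0f} tokens/s")
```

Halving the bits per weight halves the bytes streamed per token, which is why lower-precision formats raise this ceiling.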

GB200 NVL72 Server

- Combines 36 GB200 Superchips (72 Blackwell GPUs and 36 Grace CPUs) in a rack-scale, liquid-cooled system, according to NVIDIA CEO Jensen Huang

- Acts as a single GPU, delivering up to 30x the LLM inference performance of the same number of H100 GPUs

- Up to 25x lower total cost of ownership (TCO) and energy consumption for LLM inference compared to the same number of H100 GPUs
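The rack-level numbers follow from the per-superchip figures. The sketch below simply multiplies the GB200 figures quoted above by 36 and uses the 1.8 TB/s per-GPU NVLink figure from the networking specifications. The `Superchip` type and `rack_totals` helper are illustrative, and NVIDIA’s published system specifications may differ from this naive multiplication.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """Per-superchip figures as quoted in this article (illustrative type)."""
    gpus: int
    cpus: int
    hbm_gb: float
    hbm_bandwidth_tbps: float
    nvlink_tbps_per_gpu: float  # bidirectional NVLink throughput per GPU


GB200 = Superchip(gpus=2, cpus=1, hbm_gb=384, hbm_bandwidth_tbps=16,
                  nvlink_tbps_per_gpu=1.8)


def rack_totals(chip: Superchip, count: int) -> dict:
    """Naively scale per-superchip figures to a rack of `count` superchips."""
    gpus = chip.gpus * count
    return {
        "gpus": gpus,
        "cpus": chip.cpus * count,
        "hbm_tb": chip.hbm_gb * count / 1000,
        "hbm_bandwidth_tbps": chip.hbm_bandwidth_tbps * count,
        "nvlink_tbps": chip.nvlink_tbps_per_gpu * gpus,
    }


if __name__ == "__main__":
    for name, value in rack_totals(GB200, 36).items():
        print(f"{name}: {value:g}")
```

For 36 superchips this works out to 72 GPUs, 36 CPUs, about 13.8 TB of HBM3e, 576 TB/s of aggregate memory bandwidth, and roughly 130 TB/s of aggregate NVLink bandwidth.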

Industry Impact and Adoption

The unveiling of the Blackwell platform has garnered widespread attention and support from major players in the tech industry:

Cloud Service Providers

- Google/Alphabet: Plans to use Blackwell across Google Cloud and Google DeepMind.

- Amazon Web Services (AWS): Will integrate Blackwell into its services and continue collaboration on Project Ceiba.

- Microsoft Azure: Committed to offering Blackwell-based infrastructure globally.

- Oracle Cloud Infrastructure: Anticipates qualitative and quantitative breakthroughs in AI and data analytics.

AI Companies and Tech Giants

- Meta: Plans to use Blackwell to train its open-source Llama models and develop next-generation AI products.

- OpenAI: Expects Blackwell to accelerate the delivery of leading-edge models.

- Tesla and xAI: Both are adopting the NVIDIA GB200, and Elon Musk praised NVIDIA hardware as currently unmatched for AI.

Hardware Manufacturers

Companies like Dell, Hewlett Packard Enterprise, Lenovo, and Supermicro are expected to deliver a wide range of servers based on Blackwell products.

Technical Specifications and Capabilities

AI Performance

- Supports AI training and real-time LLM inference for models scaling up to 10 trillion parameters

- New 4-bit floating point AI inference capabilities

- Double the compute and model sizes compared to previous generations
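Halving the bits per weight is what lets the same memory hold roughly twice as many parameters. The sketch below assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit) used by the OCP Microscaling FP4 format; the article does not specify Blackwell’s exact encoding, and the single absolute-max scale per block is a simplified stand-in for whatever scaling Blackwell’s hardware and software actually use. All function names are hypothetical.

```python
# Signed FP4 sketch, assuming the E2M1 layout (1 sign, 2 exponent, 1 mantissa
# bit). These are the non-negative magnitudes E2M1 can represent.
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_block(values):
    """Map a block of floats to (scale, signed E2M1 codes).

    One absolute-max scale per block is a simplification; it maps the
    largest magnitude in the block onto 6.0, the largest E2M1 value.
    """
    amax = max((abs(v) for v in values), default=0.0)
    scale = amax / E2M1_MAGNITUDES[-1] if amax > 0 else 1.0
    codes = []
    for v in values:
        # Round to the nearest representable magnitude (ties go to the smaller one).
        mag = min(E2M1_MAGNITUDES, key=lambda m: abs(abs(v) / scale - m))
        codes.append(mag if v >= 0 else -mag)
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate floats from a scale and E2M1 codes."""
    return [scale * c for c in codes]


def weight_bytes(num_params, bits_per_param):
    """Storage needed for the weights at a given precision."""
    return num_params * bits_per_param / 8


if __name__ == "__main__":
    scale, codes = quantize_block([0.12, -0.5, 0.33, 0.9])
    print(scale, codes, dequantize_block(scale, codes))
    # 10 trillion parameters: 8-bit vs 4-bit weight storage, in terabytes.
    for bits in (8, 4):
        print(f"{bits}-bit: {weight_bytes(10e12, bits) / 1e12:.0f} TB")
```

At 4 bits per weight, 10 trillion parameters occupy about 5 TB, versus about 10 TB at 8 bits; that halving is one way to read the “double the model sizes” claim from the memory side.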

Networking and Interconnect

- Fifth-generation NVLink delivers 1.8 TB/s of bidirectional throughput per GPU

- Supports seamless communication among up to 576 GPUs for complex LLMs

- Compatible with NVIDIA Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms for networking speeds up to 800Gb/s
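Per-GPU link bandwidth matters most for collective operations such as the gradient all-reduce in data-parallel training. As a rough sketch, the textbook ring all-reduce cost model has each of n GPUs send 2(n - 1)/n × S bytes for an S-byte buffer. The estimate below assumes the 1.8 TB/s bidirectional figure splits evenly into 0.9 TB/s per direction and ignores latency and protocol overhead. NVLink Switch systems are not limited to ring algorithms, so treat the result as an illustrative estimate rather than a measured figure; the 70-billion-parameter model is hypothetical.

```python
def ring_allreduce_seconds(buffer_bytes: float, num_gpus: int,
                           bidirectional_bytes_per_s: float) -> float:
    """Bandwidth-only ring all-reduce time estimate (latency ignored)."""
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    per_direction = bidirectional_bytes_per_s / 2
    # Reduce-scatter plus all-gather: each GPU sends 2(n-1)/n of the buffer.
    bytes_sent = 2 * (num_gpus - 1) / num_gpus * buffer_bytes
    return bytes_sent / per_direction


if __name__ == "__main__":
    NVLINK_BIDIR = 1.8e12  # 1.8 TB/s per GPU, as quoted above
    grads = 70e9 * 2       # hypothetical 70B params with 16-bit gradients
    for n in (8, 72, 576):
        t = ring_allreduce_seconds(grads, n, NVLINK_BIDIR)
        print(f"{n} GPUs: ~{t * 1000:.0f} ms per all-reduce")
```

Because 2(n - 1)/n approaches 2, the bandwidth term barely grows with GPU count in this model; latency, which the sketch omits, is what grows.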

Security and Reliability

- Advanced confidential computing capabilities

- Support for new native interface encryption protocols

- Dedicated RAS engine for reliability, availability, and serviceability

- AI-based preventative maintenance for diagnostics and reliability forecasting

Software Ecosystem and Support

NVIDIA is ensuring that the Blackwell platform is supported by a robust software ecosystem:

- NVIDIA AI Enterprise: End-to-end operating system for production-grade AI

- NVIDIA NIM inference microservices: Recently announced to complement the Blackwell platform

- AI frameworks, libraries, and tools: Deployable on NVIDIA-accelerated clouds, data centers, and workstations

Applications and Use Cases

The Blackwell platform is poised to enable breakthroughs across various domains:

- Generative AI: Powering the next generation of large language models and creative AI applications with NVIDIA’s GB200 Grace Blackwell Superchip

- Data Processing: Accelerating database queries and data analytics workflows

- Engineering Simulation: Enhancing tools for designing and simulating electrical, mechanical, and manufacturing systems

- Electronic Design Automation: Speeding up the development of electronic components and systems

- Computer-Aided Drug Design: Accelerating pharmaceutical research and development

- Quantum Computing: Providing the computational power needed for quantum simulations and algorithm development

Industry Perspectives

The announcement of the Blackwell platform has elicited enthusiastic responses from industry leaders:

Jensen Huang, founder and CEO of NVIDIA, stated: “Generative AI is the defining technology of our time. Blackwell is the engine to power this new industrial revolution.”

Sundar Pichai, CEO of Alphabet and Google, emphasized the importance of infrastructure investment: “As we enter the AI platform shift, we continue to invest deeply in infrastructure for our own products and services, and for our Cloud customers.”

Mark Zuckerberg, founder and CEO of Meta, highlighted the platform’s potential impact: “We’re looking forward to using NVIDIA’s Blackwell to help train our open-source Llama models and build the next generation of Meta AI and consumer products.”

Conclusion

The introduction of NVIDIA’s Blackwell platform represents a significant milestone in the evolution of AI computing. With its unprecedented processing power, energy efficiency, and advanced features, Blackwell is set to enable a new wave of AI innovations across industries. As major tech companies and cloud providers rush to adopt this technology, we can expect to see rapid advancements in generative AI, scientific research, and data-driven applications in the coming years.

The Blackwell platform not only pushes the boundaries of what’s possible in AI computation but also addresses critical concerns such as energy efficiency, security, and reliability. As AI models continue to grow in size and complexity, technologies like Blackwell will play a crucial role in unlocking new possibilities while managing the associated computational challenges.

As we stand on the brink of this new era in AI computing, it’s clear that NVIDIA’s Blackwell platform will be a driving force in shaping the future of artificial intelligence and its applications across various domains. The widespread adoption and excitement from industry leaders underscore the transformative potential of this technology, promising a future where AI can tackle even more complex problems and drive innovation at an unprecedented scale.

FAQs

What is Blackwell in Nvidia?

Blackwell is Nvidia’s latest GPU architecture designed for AI and high-performance computing. It’s named after David Harold Blackwell, a pioneering mathematician and statistician, and succeeds the previous Hopper architecture.

How powerful is Nvidia Blackwell?

Nvidia Blackwell is extremely powerful, featuring 208 billion transistors and, according to Nvidia, running trillion-parameter LLM inference at up to 25 times lower cost and energy consumption than its predecessor. It can handle AI models with up to 10 trillion parameters and provides significant performance improvements for AI training and inference.

What is the Blackwell AI chip?

The Blackwell AI chip is Nvidia’s latest GPU designed specifically for datacenter-scale generative AI. It’s built on a custom TSMC process, combining two dies with a 10 TB/s interconnect, and incorporates several innovations including a second-generation Transformer Engine and advanced security features.

How much is the Nvidia Blackwell GPU?

Nvidia had not publicly announced pricing for the Blackwell GPU at the time of writing. Pricing details are typically released closer to a product’s availability date and may vary by model and configuration.

When will Nvidia Blackwell be available?

Nvidia has announced that Blackwell-based products will be available from partners starting later in 2024. Specific release dates may vary depending on the product and partner.

What are the key innovations in the Blackwell architecture?

The Blackwell architecture introduces six key innovations: a dual-die GPU design, a second-generation Transformer Engine, fifth-generation NVLink, a dedicated RAS engine, advanced security features, and a new decompression engine.

Which companies are planning to adopt Nvidia Blackwell later this year?

Many major tech companies and cloud providers have announced plans to adopt Blackwell, including Google, Amazon Web Services, Microsoft, Meta, OpenAI, and Oracle, among others. Nvidia said Blackwell-based products will be available from partners later this year, and the platform, including the GB200 Grace Blackwell Superchip, is expected to be widely used across industries for AI and high-performance computing.