Large Language Models (LLMs) have transformed AI. These powerful systems, such as GPT-3, have made possible a wide range of applications, from conversational chatbots to content generators that can draft articles and stories fluently.

They are now the go-to tools for natural language processing tasks and for automating different parts of producing human-sounding text. So why train your own LLM if the pretrained ones are so good?

Although pretrained models are powerful, they are generic in nature. They lack the precision and unique touch that can make your AI stand out in a crowded market. Our goal in this step-by-step guide is to shed light on the route to AI innovation.

We will break the intimidating task of training your own LLM into steps that are easy to follow. By the end of this guide, you will have the skills and information to create AI solutions that meet your requirements and goals.

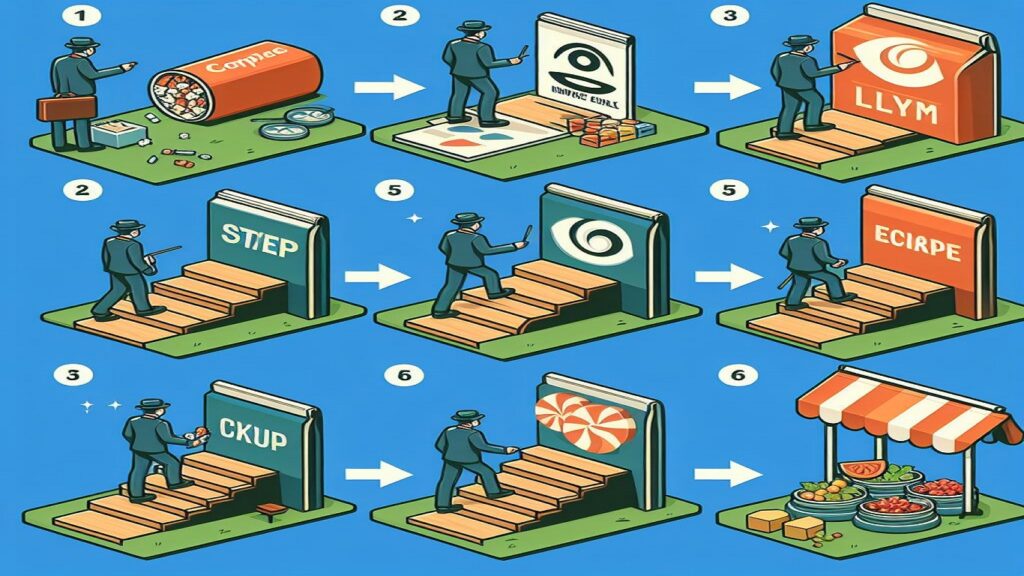

Steps on How to Train an LLM

This guide is your compass on the journey of LLM customization, whether you are a content creator looking to automate article writing or a business looking to improve customer support with a chatbot that speaks the language of your industry.

Imagine an AI assistant that speaks in a tone and manner reflecting your brand's identity and that knows the lingo and subtleties specific to your industry. Imagine an AI content generator that creates articles that speak directly to your target audience and cater to their unique needs and preferences.

These are just a few of the opportunities that arise when you train your own LLM.

Define Objective

Setting a goal is crucial when you are first starting your LLM training journey. Are you trying to develop an AI for a specific industry, a content generator, or a conversational chatbot? The clearer your objective, the easier every subsequent decision on your LLM development path becomes.

Are you aiming to create content, provide customer service, or analyze data? Different data sources, model architectures, and evaluation standards will be needed for each objective. Think about the difficulties and demands of the domain you have selected.

Vision and purpose are crucial to this first step. Once you have a clear objective, you are ready to start the process of training an LLM.

Assemble Data

Data is the lifeblood of any LLM. It is the material from which your AI will learn to produce text, so data collection needs to be strategic and meticulous. Consider the project scope first. You may require a variety of sources, including books, websites, scholarly articles, and social media posts.

Make sure the topics, writing styles, and contexts in your dataset are diverse. This variety will make your LLM versatile and able to handle a range of responsibilities. Data quality is as significant as quantity. Eliminate duplicates, fix mistakes, and harmonize formats to make data cleaner.
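As an illustration of the cleaning step, here is a minimal sketch that harmonizes whitespace and Unicode forms and then removes exact duplicates. The function names `normalize` and `deduplicate` are made up for this example, and treating texts that differ only in letter case as duplicates is an assumption you may not want for your own data.

```python
import hashlib
import re
import unicodedata


def normalize(text: str) -> str:
    """Harmonize formatting: Unicode form and whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = re.sub(r"\s+", " ", text)
    return text.strip()


def deduplicate(documents):
    """Drop empty documents and exact duplicates (after normalization)."""
    seen = set()
    cleaned = []
    for doc in documents:
        norm = normalize(doc)
        if not norm:
            continue
        # Hash a case-folded copy so "Hello" and "hello" count as duplicates
        # (an assumption made for this sketch).
        key = hashlib.sha256(norm.casefold().encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            cleaned.append(norm)
    return cleaned


raw = ["Hello   world!", "hello world!", "", "A second\tdocument.\n"]
print(deduplicate(raw))
```

Exact-match hashing only catches identical texts. Near-duplicates, such as the same article with a different footer, need fuzzier methods that are beyond this sketch.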

When gathering data, keep copyright and licensing concerns in mind. Verify that you are authorized to use the texts in your dataset.

Preprocessing of Data

The key here is getting your data into a format that an LLM can understand. You must tokenize the text first. Tokenization divides your text into smaller units called tokens. Since LLMs work on individual tokens, not on paragraphs or documents, this step is crucial.

Decide how you will handle capitalization, punctuation, and special characters. Different models and applications may have specific needs in this regard. To reduce words to their base forms, you might also look into lemmatization or stemming.

This can help the model handle word variations, which may improve its performance. Also think about how you will manage lengthy documents. Long articles or documents may need to be divided into smaller, manageable chunks.
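One common way to divide long documents is a sliding window with a small overlap, so that context is not lost at chunk boundaries. The sketch below assumes naive whitespace tokenization and a hypothetical helper named `chunk_tokens`. A real pipeline would use the tokenizer that belongs to your chosen model.

```python
def chunk_tokens(tokens, max_len=8, overlap=2):
    """Split a token list into windows of at most max_len tokens.

    Consecutive windows share `overlap` tokens.
    """
    if max_len <= 0 or not 0 <= overlap < max_len:
        raise ValueError("need max_len > 0 and 0 <= overlap < max_len")
    step = max_len - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_len])
        # Stop once a window has reached the end of the document.
        if start + max_len >= len(tokens):
            break
    return chunks


text = "Long articles or documents may need to be divided into smaller and manageable chunks"
tokens = text.lower().split()  # naive whitespace tokenization, for illustration only
for chunk in chunk_tokens(tokens, max_len=6, overlap=2):
    print(chunk)
```

The window size and overlap here are arbitrary. In practice they are bounded by the maximum sequence length your model accepts.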

Choose Framework and Infrastructure

It is essential to choose the appropriate deep learning framework. Hugging Face Transformers, PyTorch, and TensorFlow are popular options. Your decision may be influenced by the requirements of your project, the availability of prebuilt models, or your level of familiarity with a framework.

Consider your infrastructure requirements. You might need a significant amount of processing power, depending on the volume of data and the complexity of your model. This could be a local computer, cloud servers, or GPU clusters for large-scale training.

Certain cloud services provide GPU access, which can be economical for smaller projects. Larger models or intensive training may require specialized hardware. Finally, install the libraries and dependencies required by your selected framework.

Model Architecture

The model architecture describes the components and structure of your LLM. The Transformer architecture, made popular by models such as GPT-3 and BERT, is a common place to start. Transformers have proven useful across many NLP applications.

Bigger models can capture more intricate patterns but need more data and processing power. Smaller models may not be able to perform complex tasks to the same extent. Pretrained models are a valuable starting point for fine-tuning because they have already acquired language knowledge.
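To get a feel for how quickly size grows, the sketch below estimates the parameter count of a GPT-style Transformer from its depth and width. It uses a rough rule of thumb of about 12 × d_model² weights per layer, which assumes a feed-forward block four times as wide as the model and ignores biases, layer norms, and position embeddings. The three configurations are hypothetical and chosen only for illustration.

```python
def approx_transformer_params(n_layers, d_model, vocab_size):
    """Rough parameter count for a GPT-style decoder.

    Each layer has about 4*d_model^2 attention weights and 8*d_model^2
    feed-forward weights (assuming a 4x hidden expansion); biases, layer
    norms, and position embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


def fp32_weight_gib(n_params):
    """Memory needed just to store the weights as 32-bit floats, in GiB."""
    return n_params * 4 / 1024 ** 3


# Hypothetical configurations chosen for illustration.
for name, layers, width in [("small", 12, 768), ("medium", 24, 1024), ("large", 36, 1280)]:
    n = approx_transformer_params(layers, width, vocab_size=50_000)
    print(f"{name}: ~{n / 1e6:.0f}M parameters, ~{fp32_weight_gib(n):.2f} GiB of fp32 weights")
```

Storing the weights is only part of the cost. Training also needs memory for gradients, optimizer state, and activations, so the real footprint is several times larger.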

The limitations and goals you have will determine the architecture you choose.

Tokenization and Data Encoding

It is time to get your data ready for training, starting with tokenization. During tokenization, your text is divided into smaller pieces known as tokens, which are words or subwords. Tokenization is crucial because LLMs operate at the token level.

Ensure your data satisfies the requirements of the model, as different models may require different tokenization procedures. Decide how to handle capitalization, punctuation, and special characters; to ensure consistency, you might want to standardize these components.

Another crucial component is data encoding. You will need to translate your tokens into numerical representations. Common techniques include one-hot encoding, word embeddings, and embeddings of subword units produced by algorithms such as WordPiece or Byte Pair Encoding (BPE).

Ensure the tokenization and data encoding techniques you choose match the architecture and specifications of your model.
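The simplest form of encoding maps each token to an integer ID from a vocabulary. The sketch below assumes word-level tokens and two special tokens, `<pad>` and `<unk>`, whose names are conventions picked for this example. It also shows one-hot encoding for comparison. Subword schemes such as BPE build the vocabulary differently but end with the same kind of token-to-ID lookup.

```python
def build_vocab(tokenized_texts, specials=("<pad>", "<unk>")):
    """Map each distinct token to an integer ID, special tokens first."""
    vocab = {tok: i for i, tok in enumerate(specials)}
    for tokens in tokenized_texts:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab


def encode(tokens, vocab, max_len):
    """Convert tokens to IDs, truncating or padding to max_len."""
    ids = [vocab.get(tok, vocab["<unk>"]) for tok in tokens[:max_len]]
    return ids + [vocab["<pad>"]] * (max_len - len(ids))


def one_hot(token_id, vocab_size):
    """One-hot vector: all zeros except a 1 at the token's index."""
    return [1 if i == token_id else 0 for i in range(vocab_size)]


corpus = [["the", "model", "learns"], ["the", "data", "matters"]]
vocab = build_vocab(corpus)
ids = encode(["the", "model", "forgets"], vocab, max_len=5)
print(vocab)
print(ids)                          # "forgets" is unknown, so it maps to <unk>
print(one_hot(ids[0], len(vocab)))
```

One-hot vectors grow with the vocabulary, which is why models in practice feed the integer IDs into a learned embedding layer instead.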

Model Training

The first step in training a model is to choose the right hyperparameters. The learning rate, batch size, and number of training epochs are examples of these parameters. Consider how each one affects the model's performance.

During the training phase, you feed the model data iteratively, let it make predictions, and then adjust its internal parameters to reduce prediction errors. Optimization algorithms such as stochastic gradient descent (SGD) are used for this.

Track the model's progress as it is being trained. A validation dataset can be used to assess how it performs on tasks associated with your goal. Adjust the hyperparameters as needed to improve training.

This step will take time and computational resources, particularly for large models with large datasets. Depending on your setup, training could take hours, days, or even weeks.
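The loop described above can be sketched on a toy problem. The example below fits a straight line with mini-batch SGD in plain Python and records the validation loss after every epoch. It is not an LLM: the model, the synthetic data, and the hyperparameter values are all assumptions chosen to keep the example small. The structure of predict, measure the error, and update the parameters is the same one a deep learning framework automates for you.

```python
import random


def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(train_set, val_set, learning_rate=0.05, batch_size=8, epochs=50, seed=0):
    """Mini-batch SGD; returns the parameters and per-epoch validation loss."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    history = []
    data = list(train_set)
    for _ in range(epochs):
        rng.shuffle(data)
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            # Gradients of the batch MSE with respect to w and b.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            # Step against the gradient to reduce the prediction error.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
        history.append(mse(w, b, val_set))
    return w, b, history


# Synthetic data from y = 3x + 1 plus noise (a toy stand-in for a real corpus).
rng = random.Random(42)
points = [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in (rng.uniform(-1, 1) for _ in range(200))]
train_set, val_set = points[:160], points[160:]

w, b, history = train(train_set, val_set)
print(f"w={w:.2f}, b={b:.2f}")
print(f"validation loss: first epoch {history[0]:.4f}, last epoch {history[-1]:.4f}")
```

Try changing `learning_rate` or `batch_size` and watch how the validation loss curve responds. That is the hyperparameter tuning described above, in miniature.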

Evaluation and Validation

Validation entails using a separate validation dataset to assess model performance. This dataset needs to match your goal and be distinct from the training set. Validation enables you to determine whether the model is learning effectively.

Select the evaluation metrics that are relevant to your task. Perplexity is widely used in language modeling. Metrics like accuracy, precision, recall, and F1-score are pertinent for classification tasks. You can gauge the effectiveness of your AI with these metrics.
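Both kinds of metrics are short to compute by hand. In the sketch below, perplexity is the exponential of the average negative log-probability the model assigned to the tokens that actually occurred, and precision, recall, and F1 are computed for a binary classifier. The probabilities and labels are made-up sample values.

```python
import math


def perplexity(token_probs):
    """Exponential of the average negative log-probability of the observed tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary classification metrics for the given positive label."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# A model that gives every observed token probability 0.25 is as uncertain
# as a uniform guess among four options, so its perplexity is about 4.
print(perplexity([0.25, 0.25, 0.25, 0.25]))

y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
print(precision_recall_f1(y_true, y_pred))
```

Lower perplexity means the model is less surprised by the validation text. For the classification metrics, higher is better.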

Fine Tuning

After the model has finished its initial training, you may consider fine-tuning it to improve its performance on specific tasks or domains. To fine-tune the model, you train it further on a task-specific dataset.

By fine-tuning, you make your AI effective and versatile by tailoring it to particular use cases or industries.
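The idea can be shown on a toy model: start from parameters learned on general data and continue training on a small task-specific dataset, typically with a smaller learning rate. The sketch below uses a straight-line model and made-up data rather than an LLM, so treat it as an analogy for the procedure, not a recipe.

```python
def gradient_descent(w, b, data, learning_rate, epochs):
    """Run full-batch gradient descent on y = w*x + b, starting from (w, b)."""
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# "Pretraining": plenty of general data following y = 2x.
general_data = [(x / 10, 2 * x / 10) for x in range(-50, 51)]
w, b = gradient_descent(0.0, 0.0, general_data, learning_rate=0.05, epochs=200)

# "Fine-tuning": a handful of task-specific examples following y = 2x + 0.5.
task_data = [(-1.0, -1.5), (0.0, 0.5), (1.0, 2.5), (2.0, 4.5)]
before = mse(w, b, task_data)
# Start from the pretrained parameters and use a smaller learning rate.
w_ft, b_ft = gradient_descent(w, b, task_data, learning_rate=0.01, epochs=300)
after = mse(w_ft, b_ft, task_data)

print(f"task loss before fine-tuning: {before:.4f}, after: {after:.4f}")
```

Because the pretrained slope is already right, only a small adjustment is needed. This is the reason fine-tuning usually needs far less data than training from scratch.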

Testing and Deployment

When testing, use data that resembles what your AI will encounter in real use. Ensure that it meets your requirements for resource consumption, accuracy, and response time. Testing is necessary to find any problems or peculiarities that require attention.
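A small acceptance harness can automate the accuracy and response-time checks. In the sketch below, `fake_model` is a hypothetical stand-in for your deployed model's inference call, and the thresholds and test cases are placeholder values. Resource consumption is not measured here and would need separate tooling.

```python
import time


def run_acceptance_tests(generate, cases, max_latency_s=0.5, min_accuracy=0.8):
    """Check a text-generation callable against accuracy and latency budgets.

    `cases` is a list of (prompt, expected_substring) pairs.
    """
    correct = 0
    worst_latency = 0.0
    for prompt, expected in cases:
        start = time.perf_counter()
        output = generate(prompt)
        worst_latency = max(worst_latency, time.perf_counter() - start)
        if expected.lower() in output.lower():
            correct += 1
    accuracy = correct / len(cases)
    return {
        "accuracy": accuracy,
        "worst_latency_s": worst_latency,
        "passed": accuracy >= min_accuracy and worst_latency <= max_latency_s,
    }


def fake_model(prompt):
    """Hypothetical stand-in for a deployed model's inference call."""
    canned = {"What are your opening hours?": "We are open 9am to 5pm on weekdays."}
    return canned.get(prompt, "Sorry, I am not sure.")


cases = [
    ("What are your opening hours?", "9am to 5pm"),
    ("Do you ship abroad?", "yes"),
]
print(run_acceptance_tests(fake_model, cases))
```

Substring matching is a crude correctness check. For open-ended generation you would likely replace it with task-specific scoring or human review.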

Making your AI user friendly is part of deployment. This could entail integrating it into an app, website, or other system. To manage availability, you may decide to use containerization platforms or cloud services for deployment.

Consider user security and access. When handling sensitive data or restricting who can use your AI, implement user authentication and access controls as needed.

Continuous Improvement

The process of developing and improving your AI continues long after it is deployed. Collect user feedback regularly and study how the AI performs in the real world. Take note of feedback and criticism from users to determine areas that require improvement.

Keep an eye on the usage and performance of your AI. Analyze the data to learn about its strengths and weaknesses, and try to anticipate problems that might develop later on. Schedule frequent updates and retraining of the model, and be ready to modify your AI as new information becomes available.

An essential component of continuous improvement is responsible AI development. Ensure the AI you develop complies with all applicable laws and is fair and ethical. Use techniques for bias detection and mitigation to deal with possible biases in outputs and data.

Conclusion

Training a Large Language Model is a difficult but worthwhile undertaking. It gives you the freedom to design AI solutions that are specific to your requirements. You can start a journey of AI innovation, regardless of whether you are creating chatbots, content generators, or industry solutions.

Training an LLM is a continuous process of improvement and adaptation. If you stay committed to ongoing development, your LLM will grow into a potent tool that promotes creativity and productivity. Set out on your AI journey by defining your goal, gathering data, and taking that initial step.

FAQs – How to Train an LLM?

What is a Large Language Model (LLM)?

A large language model (LLM) is a type of artificial intelligence (AI) model that is designed to process and generate human-like language. LLMs are typically created by training on a massive and diverse text dataset so that they can understand and generate natural language.

Why would one want to train their own LLM?

Training your own LLM allows you to customize and fine-tune the model according to your specific use case and data sources, which can lead to improved performance and accuracy for your specific needs.

Additionally, you can incorporate human feedback and reinforcement learning to further enhance the model according to your requirements.

What are the key steps involved in training a Large Language Model?

To train an LLM, you start by preprocessing and tokenizing your text data, then train the model using an appropriate model architecture and training process. You can then fine-tune the model on task-specific data, using techniques like reinforcement learning to improve its performance.

What are the necessary tools and technologies required to train an LLM?

To train your own large language model, you need access to a powerful GPU for efficient model training. You will also need Python for implementing the training process, a deep learning framework such as PyTorch or TensorFlow, and pretrained transformer models from libraries like Hugging Face Transformers.