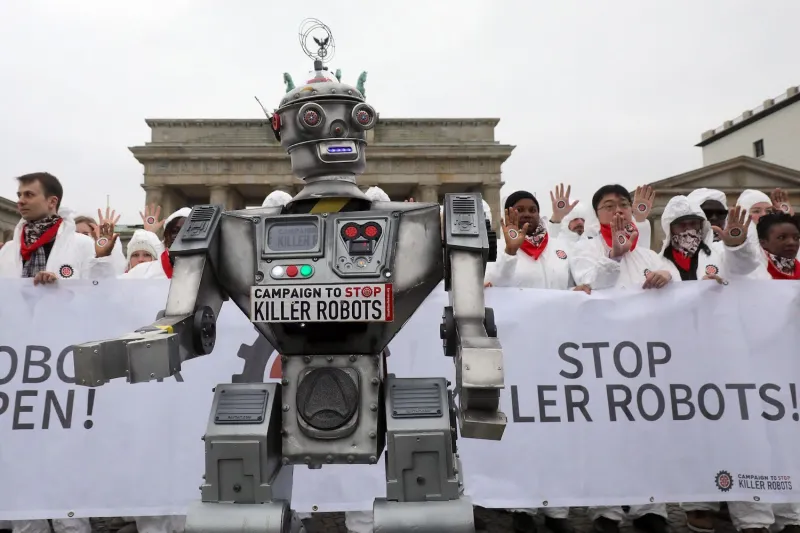

Some countries are developing and deploying AI-powered autonomous weapons: weapons that can decide to kill humans based on their own logic. These weapons, also called lethal autonomous weapons systems (LAWS) or “killer robots,” pose serious ethical, legal, and security challenges, as they could undermine human dignity, accountability, and international stability.

The competition for military AI supremacy is intensifying, with major players like the US, China, and Israel among the top innovators of such weapons. The United Nations (UN) has been engaged in negotiations to regulate the use of LAWS, but member states have yet to find common ground on how much human control and oversight is necessary. The AI arms race is a risky scenario that could have disastrous consequences for the future of war and peace.

The Dangers of AI and Machine Learning in Autonomous Weapons

Artificial intelligence (AI), especially machine learning, is becoming a key factor in developing and deploying autonomous weapons. These weapons can carry out tasks and strategies without human input. With machine learning, the software is not written rule by rule by a programmer; it effectively writes itself based on the data it is given.

However, this also means that the software is often a ‘black box’ – that is, it is very hard for humans to foresee, comprehend, justify, and verify how and why a machine-learning system will produce a certain outcome.

This raises many issues about the hidden bias that may be embedded in the system, such as in terms of race, gender, and sex. This has been seen in various applications, such as in law enforcement.

Autonomous weapons are already difficult to control and anticipate. The user may not know exactly what will cause the weapon to strike. Machine learning makes this problem worse: autonomous weapons controlled by it are unpredictable by design.

Some machine learning systems also keep ‘learning’ during use – known as ‘active,’ ‘online,’ or ‘self-learning’ – meaning that their task model evolves over time.

Regarding AI-powered autonomous weapons, if the system were allowed to ‘learn’ how to select targets during its use, how could the user be sure that the attack would stay within the legal limits of war?
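The idea of a system that keeps learning during use can be illustrated with a toy sketch. The example below is a generic one-dimensional classifier, not a model of any real system; the function names, starting parameters, and data stream are all hypothetical. It shows only that a model checked before deployment can give a different answer for the same input after a single online update.

```python
def predict(weight, bias, x):
    """Return 1 if the linear score is positive, else 0."""
    return 1 if weight * x + bias > 0 else 0


def online_update(weight, bias, x, label, lr=0.5):
    """One perceptron step: parameters change only when the prediction is wrong."""
    error = label - predict(weight, bias, x)
    return weight + lr * error * x, bias + lr * error


# Parameters as checked before deployment (hypothetical values).
weight, bias = 1.0, -2.0
probe = 3.0
before = predict(weight, bias, probe)

# Labelled inputs encountered during use (hypothetical stream).
stream = [(3.0, 0), (4.0, 0), (3.5, 0)]
for x, label in stream:
    weight, bias = online_update(weight, bias, x, label)

# Same input, evaluated again after the model has kept learning.
after = predict(weight, bias, probe)
print(before, after, (weight, bias))
```

Any test or review performed on the original parameters says nothing about the updated ones, which is why the question of staying within legal limits becomes harder once learning continues in the field.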

The Rise of AI-powered Autonomous Weapons that Can Kill Without Humans

The Pentagon is advancing the development of artificial intelligence (AI)-powered weapons that can independently decide to kill humans based on their own criteria. The US, China, and Israel are among the nations creating such “killer robots,” which raise concerns about machines deciding human fates without human input. These AI-powered weapons present ethical challenges and prompt questions about the need for human supervision in deadly scenarios. Critics contend that the absence of human involvement in the decision-making process could result in unexpected outcomes and a possible surge in civilian deaths.

The Debate over AI-powered Lethal Autonomous Systems in the UN

Several governments urge the United Nations (UN) to adopt a binding resolution to limit AI-powered lethal autonomous systems. However, countries like the US, Russia, Australia, and Israel are advocating for a non-binding resolution. The divergent views among these countries spark worries about the ethical and security consequences of AI-powered weaponry. As negotiations proceed, questions emerge regarding the possible development, use, and regulation of such autonomous systems on a global scale.

The Importance of Human Control over Force in the Age of AI

Austria’s chief negotiator on the issue, Alexander Kmentt, stated that this is a vital moment for humanity, which faces fundamental security, legal, and ethical issues regarding human control over the use of force. Kmentt also said that agreement on this issue could open the door to a future where autonomous weapon systems are properly regulated. He called on the international community to cooperate in tackling and reducing the risks and challenges posed by using such technology in warfare.

The Pentagon’s Plan to Use AI-Operated Drones Against the PLA

The Pentagon is considering deploying massive swarms of AI-operated drones to offset the People’s Liberation Army’s (PLA) numerical advantage in weapons and personnel. These drones are built to perform reconnaissance, surveillance, and targeted attack missions without endangering human pilots. By leveraging artificial intelligence, the drones can operate independently and communicate with each other, enabling them to cooperate effectively in large groups.

The Pentagon’s Idea to Use AI Drones that Can Kill Under Human Watch

Air Force Secretary Frank Kendall suggested that AI drones should be able to make lethal decisions while supervised by humans. The suggestion is intended to improve the efficiency and accuracy of military operations by relying on artificial intelligence to analyze huge amounts of data and make decisions quickly. The ethical consequences of using AI in life-and-death situations underline the importance of careful human oversight during these operations.

The Role of AI-Integrated Drones in the Ukraine-Russia Conflict

Ukraine has used AI-integrated drones in conflict zones against the Russian invasion, but it is unclear whether any of these systems have directly killed humans. The use of AI in modern warfare raises ethical questions and concerns about the possibility of increased civilian casualties and the need for human involvement in crucial decisions. As AI-driven drones and weaponry keep evolving, so must the international laws and regulations governing warfare, to ensure the ethical and humane use of these advanced technologies.

FAQ

What are AI-powered autonomous weapons?

Imagine a world where machines can decide who lives and dies without human oversight or accountability. This is the frightening scenario of autonomous weapons, systems that use artificial intelligence to select and attack human targets. These weapons range from drones that can fly and strike on their own to “killer robots” that can hunt and kill humans with lethal force. Some of the most powerful nations in the world, such as the US, China, and Israel, are developing and deploying these weapons, raising serious ethical and legal concerns.

Why are AI-powered autonomous weapons controversial?

These weapons pose serious moral and legal questions about the role of humans in war and peace. Opponents warn that the absence of human oversight over lethal decisions could result in tragic mistakes and a higher risk of harming innocent people.

What is the United Nations doing about autonomous weapons?

The future of warfare is at stake as the world debates how to control the use of AI-powered killer robots. Many countries want the UN to adopt a strong and legally binding agreement that would limit the deployment of these deadly machines. However, some powerful nations, such as the US, Russia, Australia, and Israel, resist this move and prefer a weaker and voluntary code of conduct. These talks aim to find common ground on how to shape, apply, and oversee the ethical and legal standards of autonomous weapons systems.

What is the Pentagon’s stance on AI-operated drones?

AI-powered drones could soon fly over the battlefields, spying on the enemy and launching deadly strikes. The Pentagon is exploring this option to cope with the overwhelming numbers of foes like the PLA. These drones could act independently and coordinate with their peers to achieve their objectives in swarms without risking human lives.

Should AI drones make lethal decisions under human supervision?

AI drones could soon have the power to kill under human guidance. That’s what Air Force Secretary Frank Kendall suggests. He believes that this would boost the effectiveness and accuracy of military actions by using AI to handle massive amounts of data and make smart choices. But this also raises ethical concerns, highlighting the need for careful human monitoring during these actions.

What are the concerns about AI in modern warfare?

AI in war poses ethical dilemmas and worries about the possible rise in innocent deaths and the need for human involvement in crucial choices. As AI-powered drones and weapons keep advancing, global rules and norms for war must also grow to guarantee the ethical and humane use of these technologies.
